
Comprehensive Guide to RAG for Product Managers

Building AI products? This comprehensive guide covers everything you need to know about retrieval augmented generation (RAG).

Brian Yam, Head of Marketing

18 mins to read

Introduction

Every B2B product is becoming AI-enabled at a lightning pace, whether we like it or not. As a product leader, whether you're building new customer-facing AI features such as an AI chatbot or integrating AI functionality into components of your existing product, understanding how to leverage AI will be a critical skill.

But it’s not as simple as ‘sprinkling in some large language models (LLMs)’, because these LLMs, like GPT or Claude, only have awareness of their training data and whatever the user (or your backend logic) includes in the prompt.

This creates three major challenges.

The training data is usually a few months or even years old – your chatbot won’t know about the latest developments in your market or your product

The knowledge is generic – it doesn't have access to your customers’ proprietary data (e.g. knowledge bases or sales data)

It’s impractical to include everything in the prompt - you can’t expect your users to upload dozens of files and paragraphs of context for every question

This is where Retrieval-Augmented Generation (RAG) comes in. Think of RAG as giving your AI product access to your customers’ data, both from within your app and from external files and apps. Just as a new employee would search through your documentation to answer customer questions, RAG enables your AI to do the same thing automatically.

RAG vs Fine-tuning

Before we get into the specifics of RAG, it’s important to distinguish RAG from fine-tuning. You may have seen a lot of companies promote the fact that they don’t train their models with users’ data, yet they have integrations with all of their users’ external applications. This is because they’re leveraging RAG, not fine-tuning.

I wrote an in-depth comparison on the differences between RAG and fine-tuning here, but long story short, RAG is going to be much easier and quicker to implement and supports real-time updates to users’ data.

Real-World RAG SaaS Applications

To help illustrate how RAG works, I thought it’d be best to use a few real-world examples of AI products/features from well known SaaS companies.

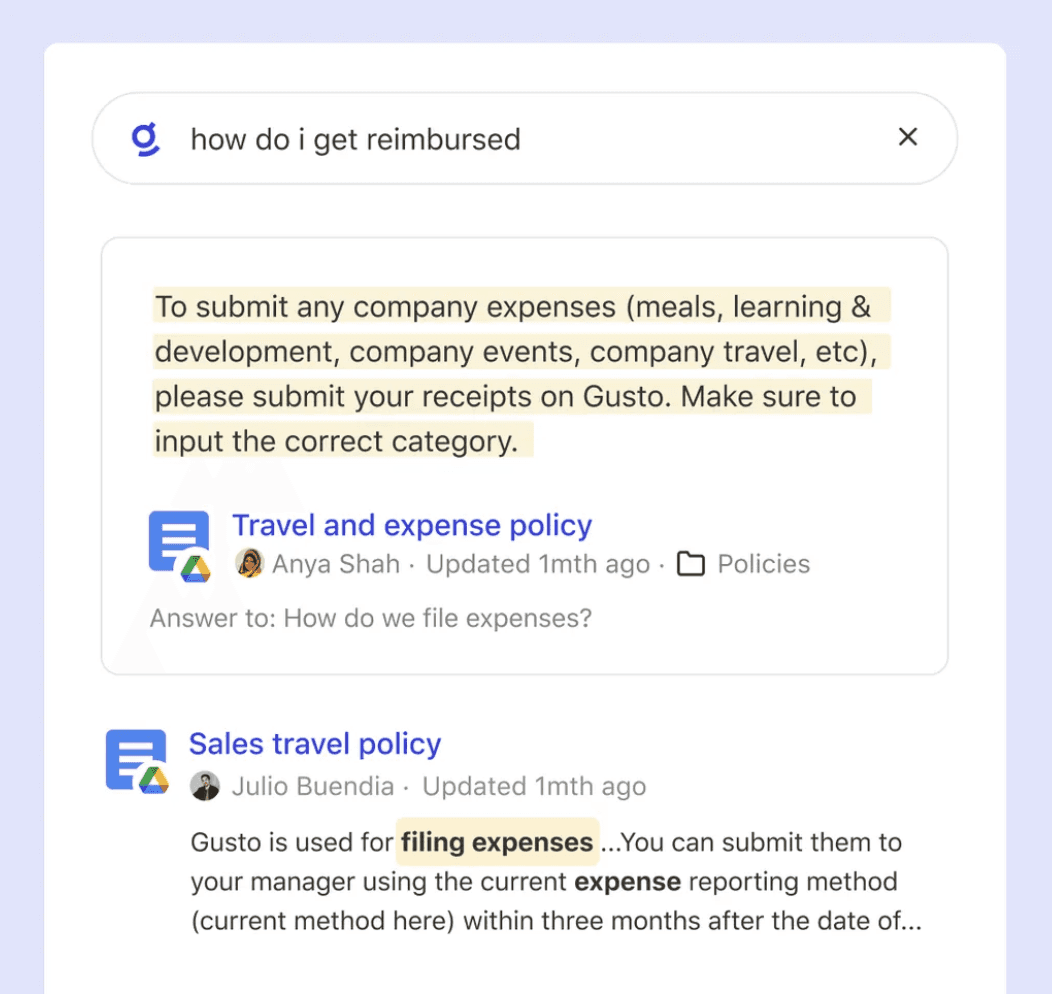

Glean: Enterprise Search and Knowledge Management

Glean ingests all of their users’ external data and files (emails, Slack messages, OneDrive files, Jira tickets etc.), and surfaces relevant data through a simple search interface.

For example, when a user asks Glean "What's our return-to-office policy?", it automatically:

Searches across all of the user’s data from connected systems

Finds relevant information from HR policies, executive announcements, and team discussions

Passes all of that information, alongside the user’s question, to the underlying LLM

The LLM synthesizes this information into a clear answer

That answer is then provided to the user, alongside links to source documents for verification

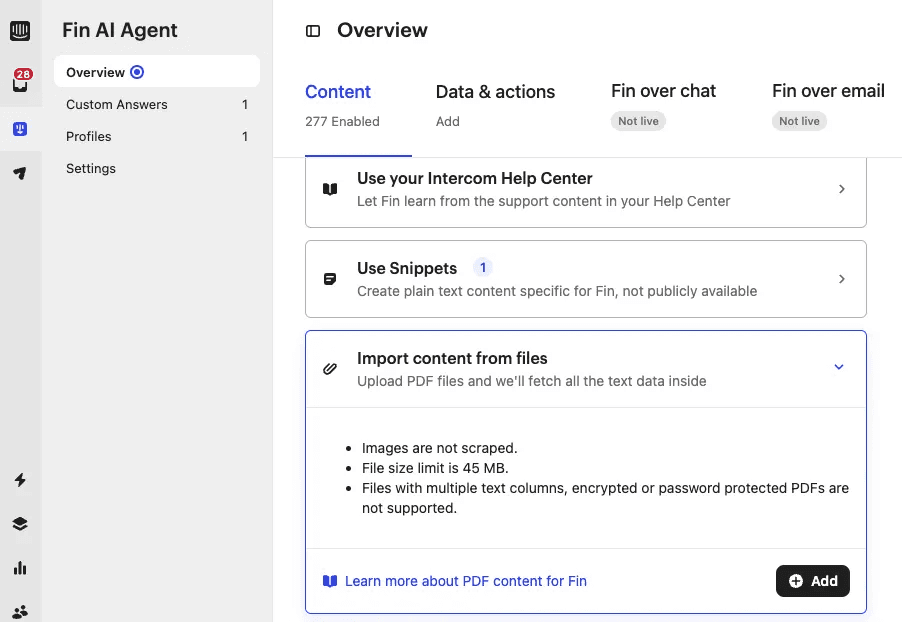

Intercom Fin: Customer Support Automation

Intercom’s Fin is an AI support agent. While traditional chatbots required manually programming ‘branched’ responses to every possible question (which is why they’re often useless, as not all questions can be anticipated), Fin does not need pre-defined branches for Q&A.

For example, when an end-user asks "How do I set up the Salesforce integration?", Fin will:

Search and retrieve relevant data from the company’s external sources (documentation, knowledge base, etc.)

Check the end-user’s plan in Salesforce to make sure their plan includes the Salesforce integration

Send all that data, alongside the end-user’s question, to the underlying LLM

Provide a personalized response like "With your Enterprise plan, here are the steps to integrate your Salesforce account. Here's how..."

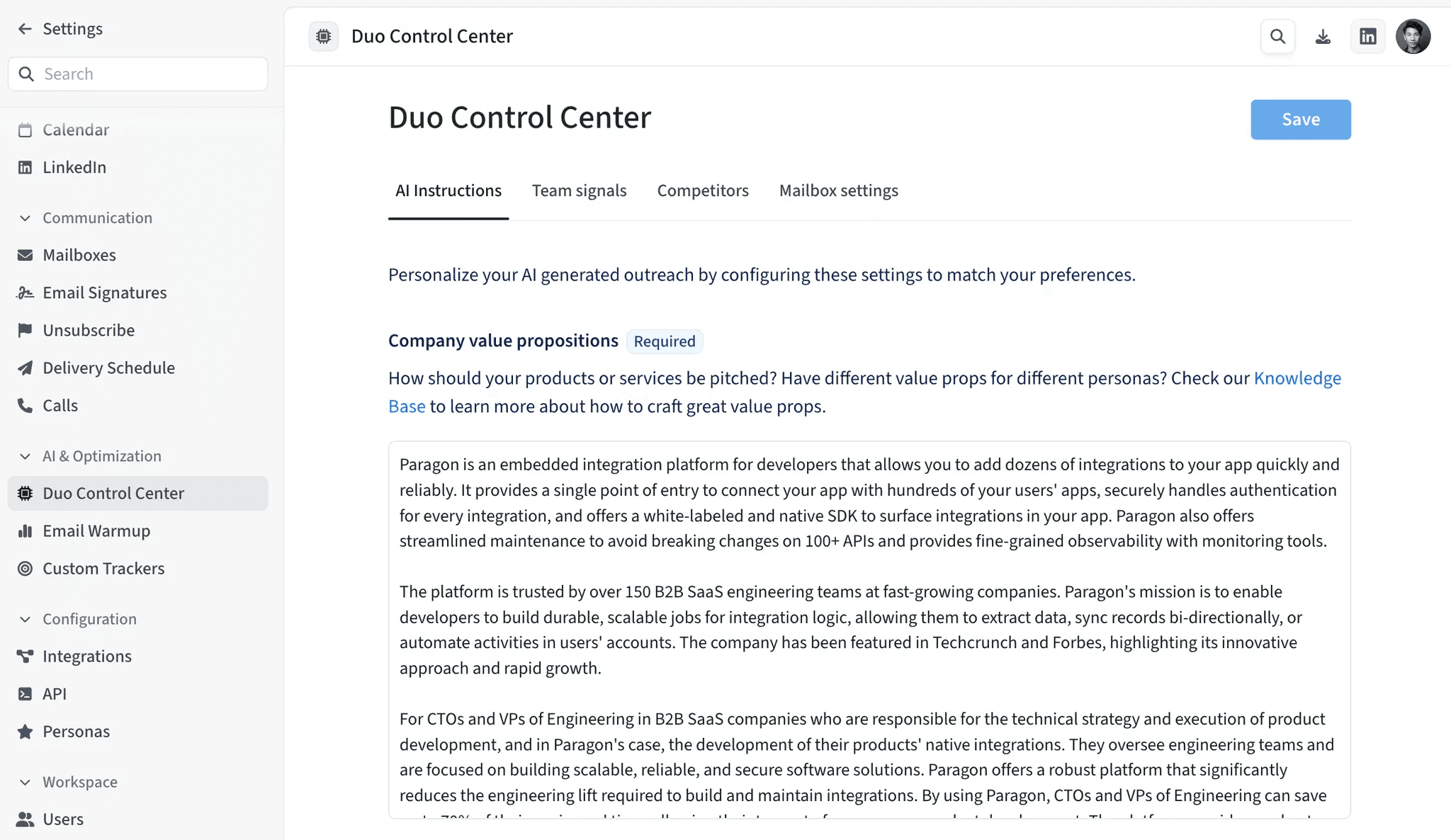

Amplemarket: Sales Engagement Tools

Amplemarket is an email outreach product that can auto-generate sales emails with AI. In order to generate these personalized emails, they require customers to provide:

details about their ideal customer profile

details about the product they are selling

case studies and other resources

This serves as the ‘context’ that will be retrieved via RAG to generate emails that are relevant to each of their customers, and tailored towards the prospect that the email is going out to.

For example, when reaching out to a healthcare CTO, Amplemarket may automatically reference your HIPAA compliance documentation and healthcare customer success stories.

Core Components of RAG Systems

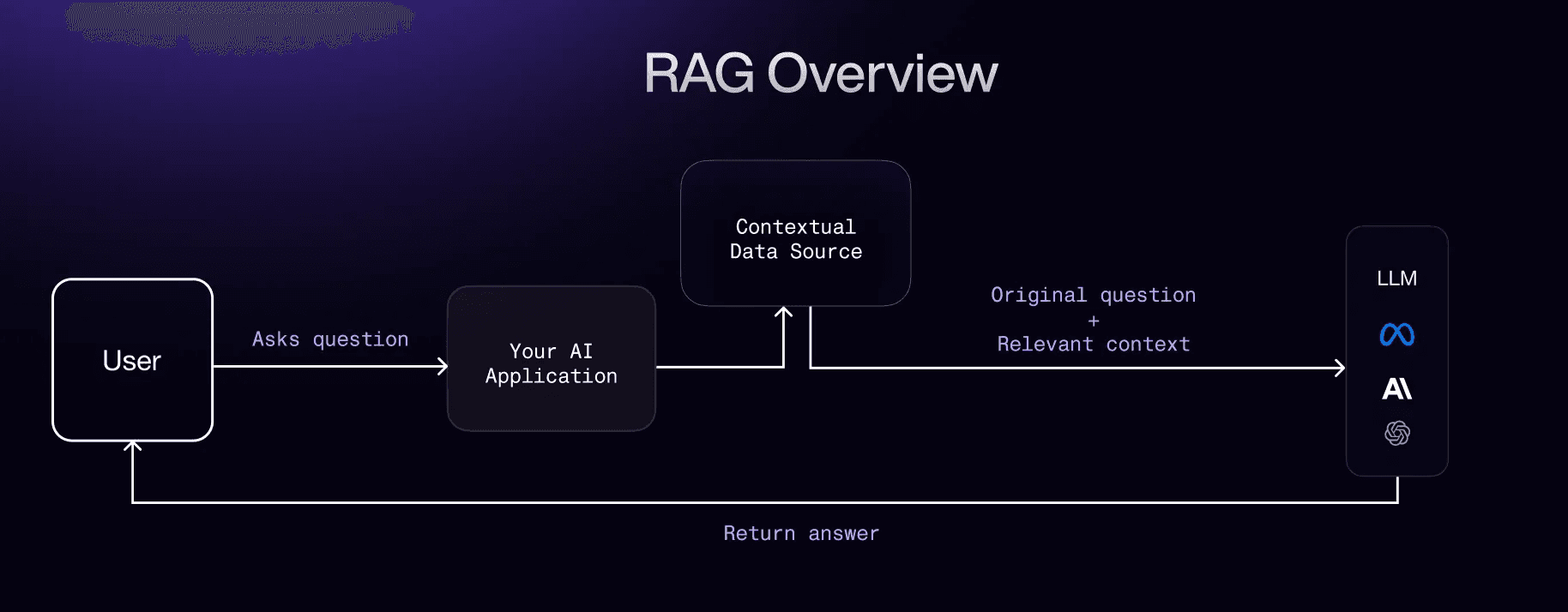

Now, let's break down a RAG system.

Query

This is the original prompt/question that triggers the RAG process. Depending on how you implement RAG capabilities in your product, this query can come from one of two sources:

User-entered query (if you have a chatbot/user input interface)

An event in your application (e.g. an AI agent kicking off a task) that generates a query

Your AI Application

This is your AI product/feature which receives the query, and orchestrates the RAG process.

Engineering teams often use LLM frameworks (like LangChain or LlamaIndex) to abstract away the RAG orchestration, but your team may build it in-house as well.

Contextual Data

Once your application receives the original query, it needs to search for relevant contextual data to help answer the query. This data can either live in your own vector database, or in your customers’ 3rd-party applications. We will dive into where and how this data is ingested further down in this article.

LLM

After your RAG process retrieves the relevant data, it feeds both the original query and the retrieved context to the LLM (e.g. GPT-4o, Gemini, or Claude), which returns a contextualized response that you pass on to your user or back to the AI agents in your product.
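The four components above can be sketched as a single function. This is a minimal illustration, not a production design: `answer_with_rag`, `fake_retrieve`, and `fake_llm` are hypothetical names, the prompt wording is an assumption, and the retrieved sentence is made-up sample data standing in for a real search and a real model call.

```python
from typing import Callable, List


def answer_with_rag(
    query: str,
    retrieve: Callable[[str], List[str]],
    call_llm: Callable[[str], str],
) -> str:
    """Orchestrate one RAG round trip: retrieve context, then ask the LLM."""
    # Step 1: find contextual data relevant to the query.
    context_chunks = retrieve(query)
    context = "\n".join(context_chunks)

    # Step 2: combine the retrieved context with the original query.
    prompt = (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

    # Step 3: the LLM generates the contextualized response.
    return call_llm(prompt)


# Hypothetical stand-ins so the sketch runs without any external service.
def fake_retrieve(query: str) -> List[str]:
    return ["Employees return to the office on Tuesdays and Thursdays."]


def fake_llm(prompt: str) -> str:
    return f"(model reply based on a prompt of {len(prompt)} characters)"


rag_reply = answer_with_rag("What's our return-to-office policy?", fake_retrieve, fake_llm)
print(rag_reply)
```

Passing `retrieve` and `call_llm` in as parameters keeps the orchestration independent of any particular vector database or model provider, which is roughly the role LLM frameworks play.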

Most of the heavy lifting in building a RAG application will be in the ingestion and/or retrieval of contextual data, which we’ll cover in detail.

Retrieving Contextual Data for RAG

Context Sources (from MVP to production-ready)

There are two key factors that will influence how you approach ingesting and/or retrieving data for RAG:

The type of data (structured, unstructured, semi-structured)

The amount of data (one file vs. hundreds of files)

Structured, Unstructured, and Semi-Structured Data

Business context is spread across your customers’ organizations, but regardless of where it lives, this data will fall into one of three buckets - structured, unstructured, and semi-structured.

These distinctions are important because you need to adapt your data retrieval strategy differently depending on the data type.

Unstructured Data

This data has no structured schema, and generally contains context that is not easily searchable. We’re seeing a lot of patterns in terms of where critical, unstructured context lives, including:

Sales or marketing emails (e.g. Salesloft, Outreach, HubSpot, and Mailchimp)

Past support chat conversations (e.g. Intercom, Zendesk, and Freshdesk)

Internal documents and files (Notion, Confluence, and files from Google Drive)

Retrieving the relevant data from an unstructured data source is hard, as you can imagine. You cannot query for specific sentences, messages, or paragraphs of context - finding relevant context requires you to perform semantic search on that unstructured data, which almost no 3rd-party APIs support.

As a result, you need to ingest all of that data ahead of time into a database, which can later be retrieved at query-time. We’ll explain this end-to-end process in the next section.

Structured data

This type of data has a schema (think database table). Some examples are:

Customer data from Salesforce/HubSpot

E-commerce inventory from Shopify/BigCommerce

Product usage analytics from Mixpanel/Amplitude

This data is easy to search through via traditional methods like SQL or via APIs directly, and there is rarely a need to ingest it upfront. Instead, you would need to give your AI agent product access to the 3rd-party APIs via function tools that your engineers will need to build.

Semi-structured data

This term is used to describe a structured schema that contains unstructured data in specific properties. Examples of this could be large paragraphs of notes in a Salesforce field or a Jira ticket.

However, for the purposes of RAG, you can largely treat this as a subset of structured data.

Now let’s walk through how your AI product will retrieve context for RAG, and as mentioned earlier, the approach will be different for structured and unstructured data.

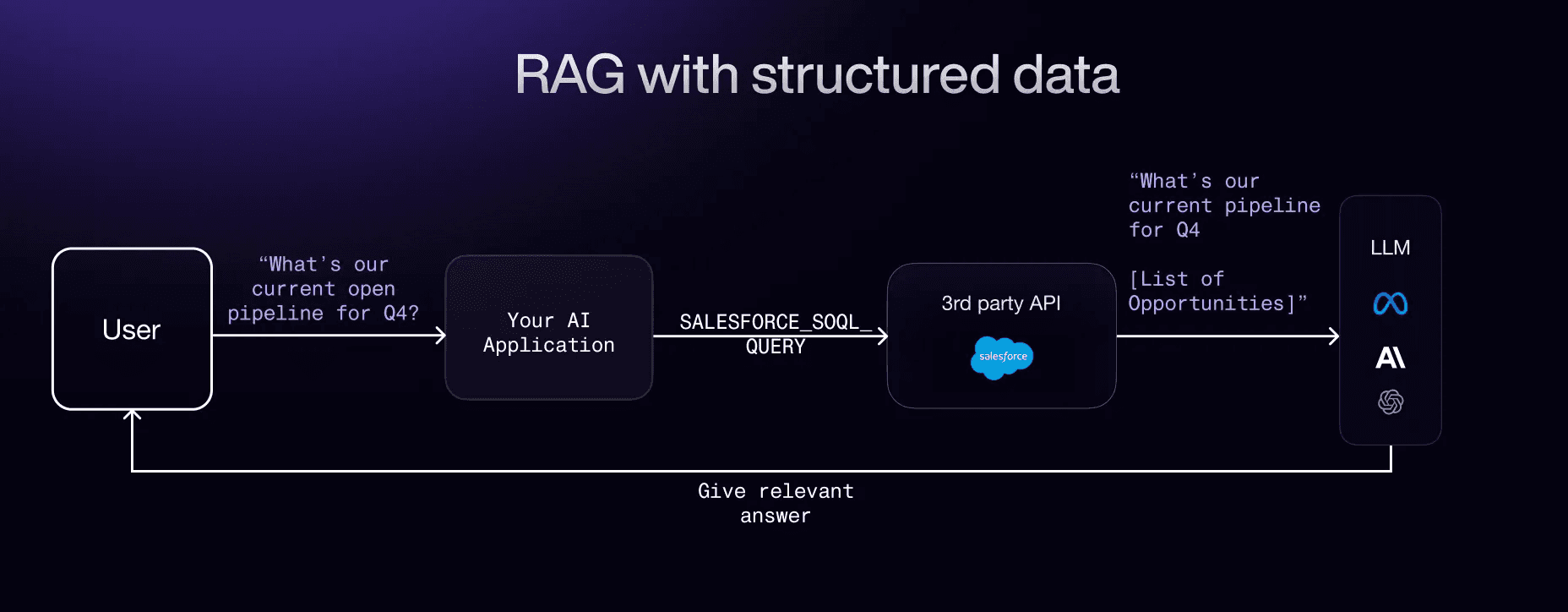

Structured Data for RAG

Structured data is generally easy to query for via APIs, so instead of doing the heavy lifting of ingesting all your users’ data into your own database up front, your AI agent can grab it at query time from the 3rd party API directly.

Here’s a diagram that shows what the flow looks like, which should look very familiar as we simply replaced the ‘contextual data’ piece with a 3rd-party data source.

In order to interact with these 3rd-party APIs, your RAG application needs ‘tools’ (yes, that’s the official term).

Tool calling in LLMs

Tools are functions, built by your engineers, that your AI application can use to complete certain tasks. For example, your engineers could build a tool that enables your AI application to search the internet via web browsing APIs or, as in the example above, interact with 3rd-party APIs like Salesforce.

At query time, the LLM will ‘pick the right tools for the job’ in order to answer the question, such as searching for relevant data in a CRM. Once that data is retrieved via the 3rd-party API, it can then be injected into the prompt to answer the question and return it to the user.
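To make the tool-calling flow more concrete, here is a minimal sketch. Everything in it is an assumption for illustration: `FAKE_CRM` stands in for a 3rd-party API such as Salesforce, `search_contacts` and `search_leads` are hypothetical tools, and the `model_request` dictionary is hard-coded where a real LLM would choose the tool. Each LLM provider defines its own tool-call format, so the shape used here is not any vendor's actual API.

```python
from typing import Any, Callable, Dict, List

# Hypothetical in-memory CRM standing in for a 3rd-party API such as Salesforce.
FAKE_CRM: List[Dict[str, str]] = [
    {"type": "Contact", "name": "Ada Park", "company": "Acme"},
    {"type": "Lead", "name": "Sam Ortiz", "company": "Globex"},
]


def search_contacts(company: str) -> List[Dict[str, str]]:
    """Tool: return CRM contacts that belong to the given company."""
    return [r for r in FAKE_CRM if r["type"] == "Contact" and r["company"] == company]


def search_leads(company: str) -> List[Dict[str, str]]:
    """Tool: return CRM leads that belong to the given company."""
    return [r for r in FAKE_CRM if r["type"] == "Lead" and r["company"] == company]


# Registry of the tools the application exposes to the model, keyed by name.
TOOLS: Dict[str, Callable[..., Any]] = {
    "search_contacts": search_contacts,
    "search_leads": search_leads,
}


def run_tool_call(tool_call: Dict[str, Any]) -> Any:
    """Execute the tool the model asked for and return its result."""
    name = tool_call["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**tool_call["arguments"])


# In a real system the LLM would emit this structure; here it is hard-coded.
model_request = {"name": "search_contacts", "arguments": {"company": "Acme"}}
tool_result = run_tool_call(model_request)
print(tool_result)
```

The result of `run_tool_call` is what would be injected into the prompt before the model writes its final answer.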

Tool building challenge

The challenge with this approach is the unpredictable/unrestricted nature of users’ queries - they can ask the AI any question. As such, your engineering team needs to build hundreds of 3rd-party tools to ensure coverage for all the potential queries.

For example, just to get data from Salesforce, your team would need to build tools to:

Search for Leads

Search for Contacts

Search for Accounts

Search for Opportunities

Search for {Custom Objects}

This is why ActionKit was born - it’s a single tool that your engineering team can equip your AI application with to instantly give it access to dozens of integrations and hundreds of tools - check it out here.

Unstructured Data for RAG

Retrieving context from unstructured data requires a slightly different approach, and changes depending on the amount of external data your AI application needs access to.

In general, data is ingested ahead of time and in the background into your own database.

That said, AI products generally take a step-wise approach when it comes to incorporating external data into their RAG process.

Starting Simple

The two most common product design patterns when working with a low volume of external context are:

User inputs in the product

File upload

User Input

The easiest place to start is simply by offering users a way to provide written context for specific use cases in your product.

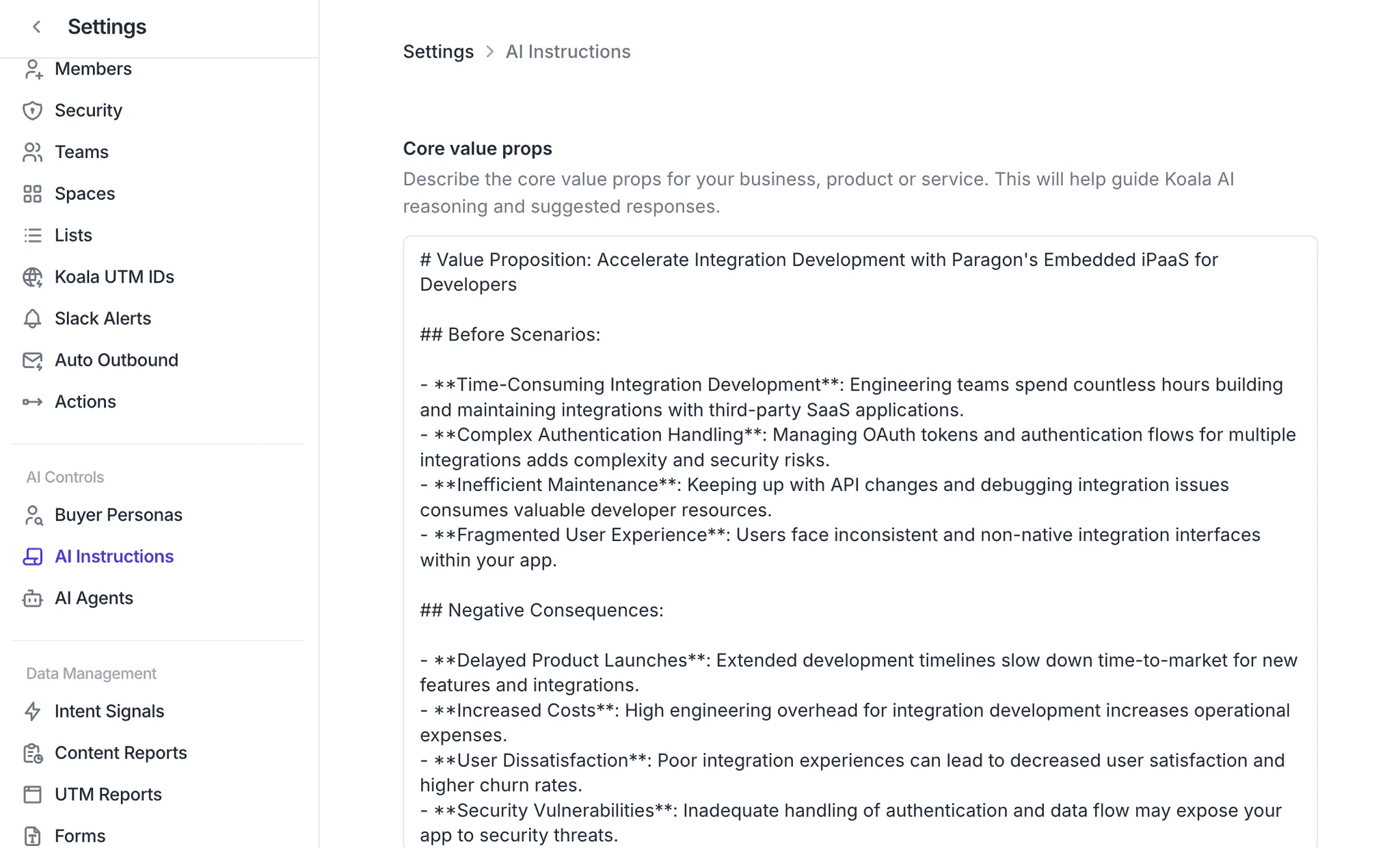

In AI sales products, this often comes in the form of a configuration page where users can provide context on their product, their customers, case studies, value propositions etc. Here’s an example from Koala, a website visitor identification platform that generates suggested emails to send to prospects.

In their case, when an email needs to be generated by their AI agent, it will retrieve this context to help craft the personalized messaging.

File upload

Similarly, many AI products also offer users the ability to upload files to achieve a similar outcome. Even OpenAI's ChatGPT is still barely scratching the surface with RAG, only offering file upload options from users’ computer, Google Drive, or OneDrive.

In this case, context is retrieved from the uploaded file to help answer the user’s query throughout the chat.

However, in B2B, these two approaches are rarely enough. Context is spread across users’ organizations, making it impractical to type out all the relevant context or upload hundreds, if not thousands, of relevant files. In addition, not everything can be uploaded (such as context from message threads in Slack or Teams).

Even if users could manually provide context, that context may become stale and outdated very quickly. This brings us to the production-ready implementation - integration-enabled data ingestion.

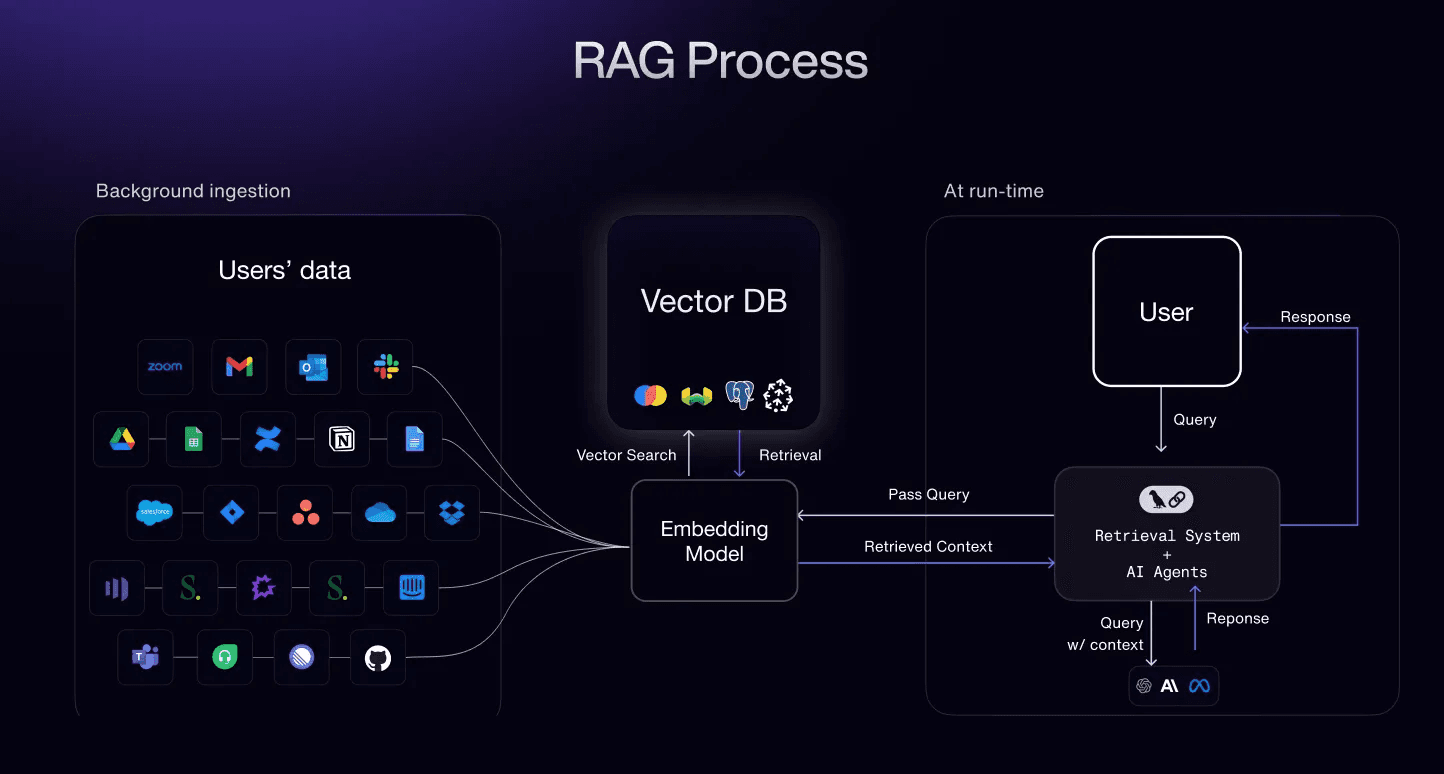

Enterprise RAG: Data Ingestion Pipelines

AI companies are realizing just how important having sufficient business context is, which is why most are racing to build integration connectors and data pipelines to ingest all of their users’ fragmented data.

Not only does this allow them to ingest much more data than the previous implementations of user inputs/file uploads, this also makes it possible to continually update the database with new data or changes to existing data.
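The "continually update" part can be sketched as an incremental sync. This is a simplified illustration under stated assumptions: `sync_documents` is a hypothetical function, documents are plain dictionaries with an `updated_at` number, and the index is an in-memory dictionary rather than a real database. A production pipeline would typically rely on webhooks or each API's change feed.

```python
from typing import Dict


def sync_documents(index: Dict[str, dict], source_docs: Dict[str, dict]) -> Dict[str, list]:
    """Bring `index` in line with `source_docs`, touching only what changed.

    Both arguments map a document ID to {"text": ..., "updated_at": ...}.
    """
    changes = {"upserted": [], "deleted": []}

    for doc_id, doc in source_docs.items():
        existing = index.get(doc_id)
        # New document, or the source copy is newer than the indexed copy.
        if existing is None or doc["updated_at"] > existing["updated_at"]:
            index[doc_id] = dict(doc)
            changes["upserted"].append(doc_id)

    # Remove documents that no longer exist in the source system.
    for doc_id in list(index):
        if doc_id not in source_docs:
            del index[doc_id]
            changes["deleted"].append(doc_id)

    return changes


# Made-up sample data: doc-1 changed, doc-2 was deleted, doc-3 is new.
index = {
    "doc-1": {"text": "Old pricing", "updated_at": 1},
    "doc-2": {"text": "Retired policy", "updated_at": 1},
}
source = {
    "doc-1": {"text": "New pricing", "updated_at": 2},
    "doc-3": {"text": "Onboarding guide", "updated_at": 1},
}
sync_result = sync_documents(index, source)
print(sync_result)
```

Handling deletions matters as much as handling updates; otherwise the AI keeps answering from documents the customer has already removed.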

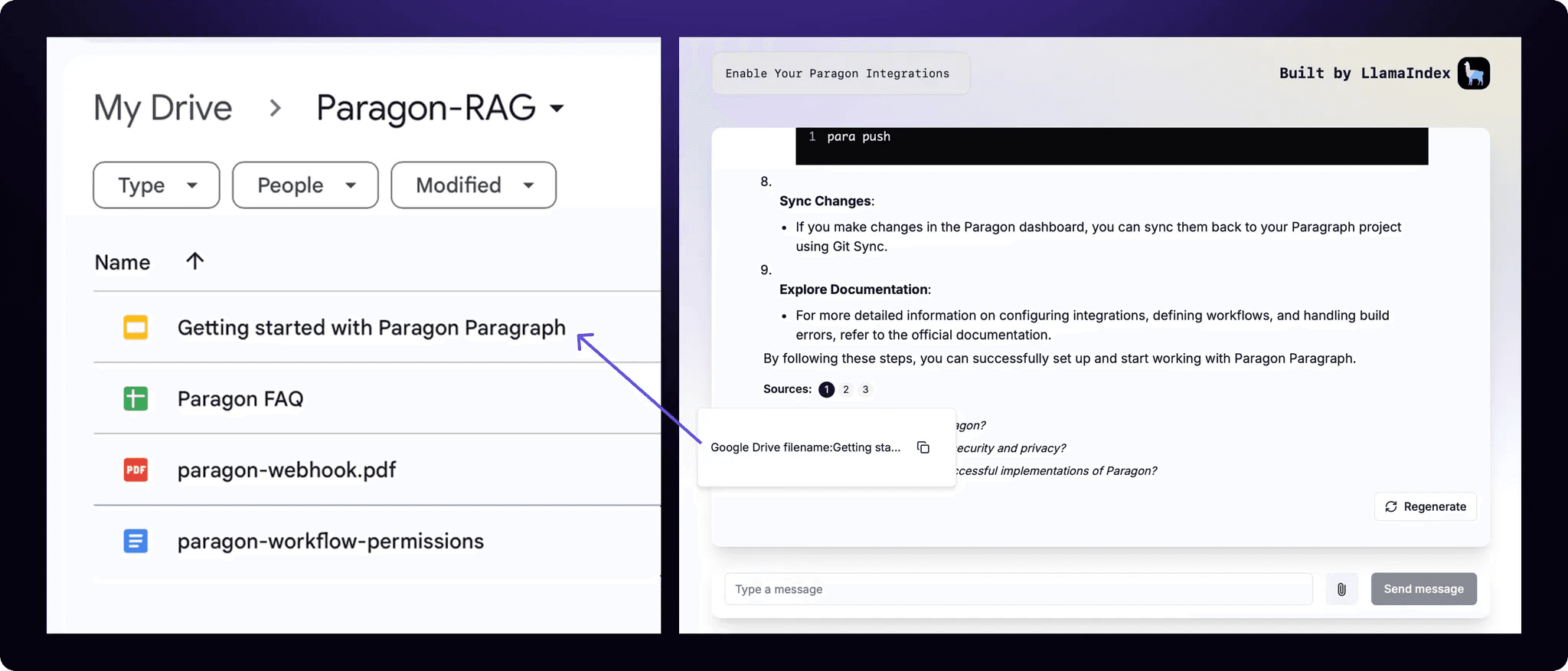

If you’re curious, this tutorial and demo video series our developer advocate put together showcases the process for building a RAG AI chatbot that uses Paragon to ingest context from Google Drive, Slack, and Notion.

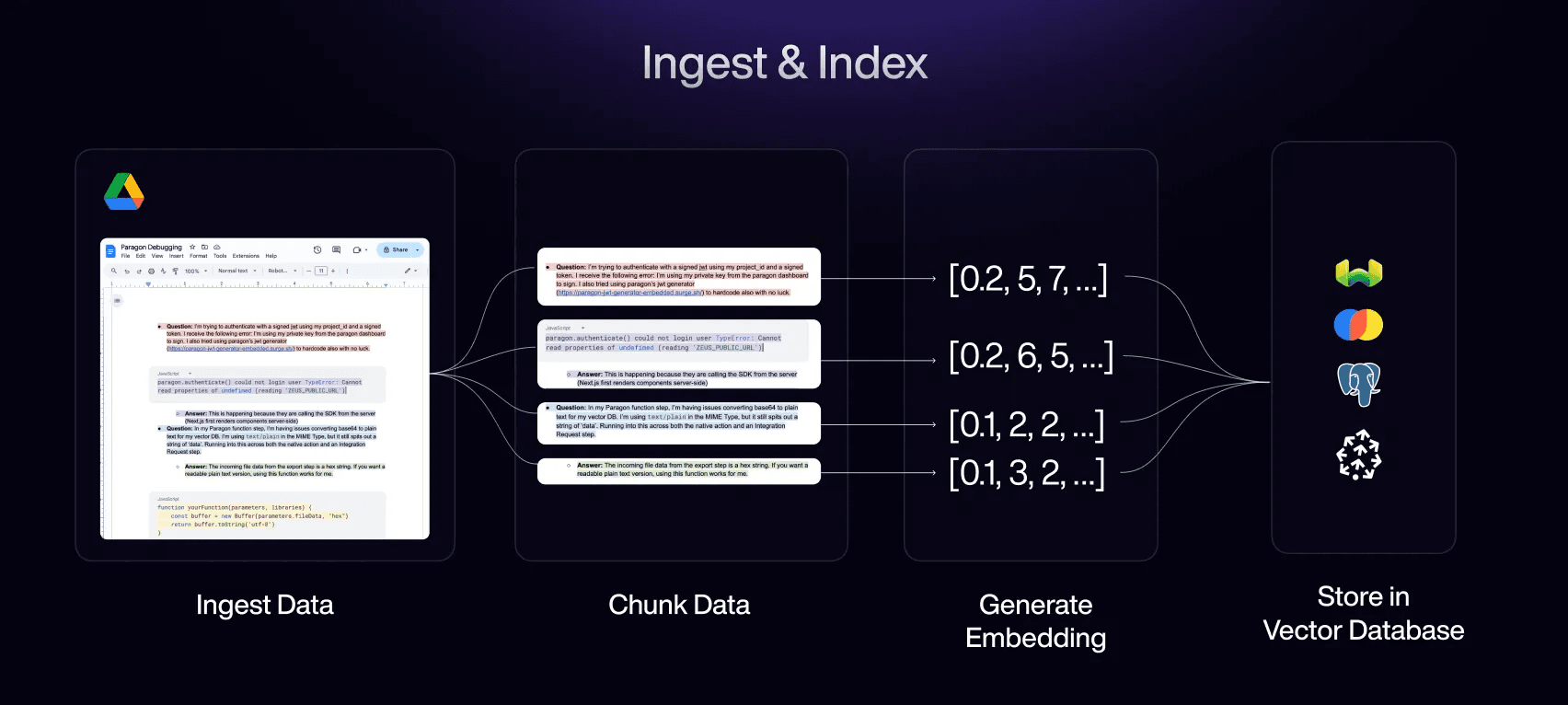

Indexing: Processing, Chunking, and Storing the Data

Once you get the files and data, you need to store it in a vector database in a way that is easily and accurately retrievable at query time. This end-to-end process is commonly referred to as indexing.

1. Pre-Processing: Making Content RAG-Ready

Think of this as making the data you’re ingesting ready for RAG. In some cases, this step is unnecessary as the data comes in plain text, but here are examples of scenarios in which pre-processing is required:

With PDFs: Convert content into machine-readable text

With webpages: Parse and remove unnecessary HTML formatting

Extract metadata like the document title and last update date
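As an illustration of the webpage case, the sketch below strips HTML down to plain text and pulls out the page title as metadata, using only Python's standard-library `html.parser`. The class and function names (`TextExtractor`, `preprocess_html`) and the sample page are hypothetical; real pipelines often use dedicated parsing libraries and handle many more edge cases.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text and the <title>, skipping script and style content."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.text_parts = []
        self._skip_depth = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1
        elif tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._skip_depth:
            return
        cleaned = " ".join(data.split())  # collapse runs of whitespace
        if not cleaned:
            return
        if self._in_title:
            self.title = cleaned
        else:
            self.text_parts.append(cleaned)


def preprocess_html(html: str) -> dict:
    """Return plain text plus basic metadata for one web page."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return {"title": parser.title, "text": " ".join(parser.text_parts)}


page = (
    "<html><head><title>Exporting data</title><style>p {color: red}</style></head>"
    "<body><h1>Export</h1><p>Click <b>Download</b> to export a CSV.</p></body></html>"
)
cleaned_page = preprocess_html(page)
print(cleaned_page)
```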

2. Chunking: Breaking Down Documents

Chunking is the process of breaking down a large unstructured data set (e.g. a 100-page PDF) into smaller, well, chunks. How you decide to chunk the data is up to you, and will have major implications for retrieval accuracy. Chunks that are too small lose context, while chunks that are too big introduce a lot of noise. Here are just a few examples of how different content types require different chunking strategies:

For a Slack conversation about a bug fix, you might keep the entire thread as one chunk to maintain context.

For a long product manual, you might split it by sections but ensure feature descriptions stay together.

For API documentation, you might keep each endpoint's documentation as a single chunk.

There’s a lot more that goes into building and optimizing a chunking strategy that we won’t get into today, but we cover it in this webinar here.
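One simple strategy, sketched below, is to pack whole paragraphs into chunks up to a size limit so that no paragraph is cut in half. This is only one of many possible approaches, not a recommendation: `chunk_by_paragraph` is a hypothetical helper, the character limit is an arbitrary assumption, and real systems often count tokens rather than characters.

```python
from typing import List


def chunk_by_paragraph(text: str, max_chars: int = 500) -> List[str]:
    """Pack whole paragraphs into chunks of at most `max_chars` characters.

    A single paragraph longer than `max_chars` is kept intact as its own chunk
    rather than being cut mid-sentence.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: List[str] = []
    current = ""
    for paragraph in paragraphs:
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if len(candidate) <= max_chars or not current:
            # Either the paragraph still fits, or it starts a fresh chunk.
            current = candidate
        else:
            # Adding this paragraph would exceed the limit: close the chunk.
            chunks.append(current)
            current = paragraph
    if current:
        chunks.append(current)
    return chunks


manual = "Intro to exports.\n\nExports run nightly.\n\nBilling is monthly."
chunks = chunk_by_paragraph(manual, max_chars=40)
for chunk in chunks:
    print(repr(chunk))
```

With the small limit used here, the two export paragraphs stay together in one chunk while the billing paragraph lands in its own, which is the "keep related content together" behavior described above.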

3. Embedding Generation: Making Text Searchable

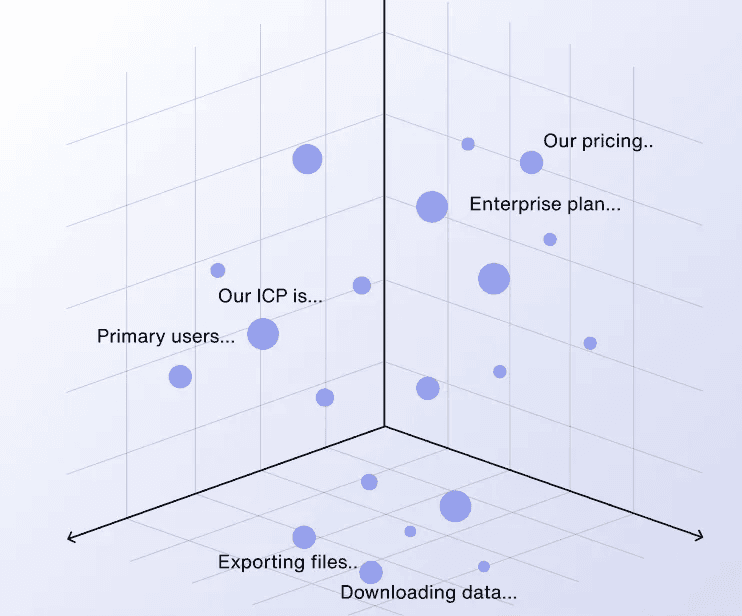

The next step is to generate an embedding from the text using an embedding model. Think of embeddings as coordinates in space (vectors) that map the semantic meaning of the text in a chunk.

This is important because it enables your AI application to search for relevant context based on meaning, and not keywords. For example, when someone asks "How do I export my data?", it would be able to find the right docs even if the documentation uses different words like "download," "backup," or "extract."
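To see why "closeness" of vectors captures meaning, here is a small sketch using cosine similarity, a common way to compare embeddings. The three vectors are made-up values chosen by hand for illustration; a real embedding model would produce vectors with hundreds or thousands of dimensions.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up 3-dimensional "embeddings"; a real model would produce these.
export_question = [0.9, 0.1, 0.0]   # "How do I export my data?"
download_doc = [0.8, 0.2, 0.1]      # "Download a backup of your workspace"
billing_doc = [0.0, 0.1, 0.9]       # "Update your billing details"

sim_download = cosine_similarity(export_question, download_doc)
sim_billing = cosine_similarity(export_question, billing_doc)
print(sim_download > sim_billing)
```

The question and the download document point in nearly the same direction even though they share no keywords, while the billing document points elsewhere.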

4. Storing Embeddings in a Vector Database

Once you have the embeddings, they are stored in the vector database. Below is an illustration of what data in a vector database would look like; in reality, there would be far more than 3 dimensions.

The importance of metadata

You need to ensure that anything you want to surface to your users about that data is stored as metadata alongside each embedding.

A very common product design pattern here is to show users what data source the context came from (e.g. Google Drive, Slack, etc.), and provide links to the specific document/message.
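A record in the vector database might therefore look something like the sketch below. The field names (`source`, `title`, `url`), the `VectorRecord` class, and the example URL are all hypothetical; each vector database has its own schema for attaching metadata.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class VectorRecord:
    """One row in a vector database: the embedding plus everything shown to users."""
    id: str
    embedding: List[float]
    text: str
    metadata: Dict[str, str] = field(default_factory=dict)


def format_citation(record: VectorRecord) -> str:
    """Build the source line a chat UI could show under an answer."""
    meta = record.metadata
    return f"{meta['source']}: {meta['title']} ({meta['url']})"


record = VectorRecord(
    id="chunk-001",
    embedding=[0.8, 0.2, 0.1],  # placeholder values, not real model output
    text="Click Download to export a CSV.",
    metadata={
        "source": "Google Drive",
        "title": "Export guide",
        "url": "https://example.com/export-guide",
    },
)
citation = format_citation(record)
print(citation)
```

If the source name, title, or link is not captured at ingestion time, it cannot be shown at query time without going back to the original system.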

To illustrate, below is a screenshot from an AI chatbot we built from our RAG tutorial series. You can see it referencing that the context came from Google Drive, and includes the name of the file and a clickable link to the file.

We hosted a webinar recently that walks through all the best practices for data ingestion and indexing - watch the recording here.

Okay, so we covered the hard part, how the data ingestion and indexing process works. Now let’s talk about what happens at query-time.

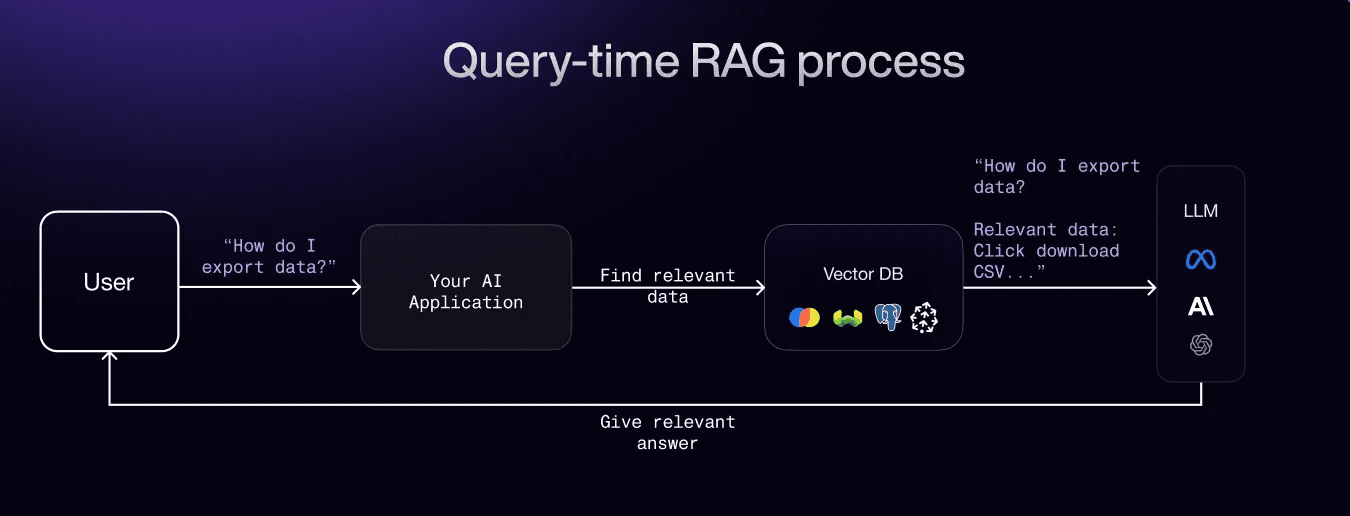

Query-time Process for RAGing Unstructured Data

For simplicity, assume your product is an AI chatbot and your users can ask it questions about their own data.

Again, this should look similar to the previous query-time diagrams, but instead of making 3rd-party API calls to retrieve data, your AI application will search for context within your own vector database, where all that ingested data is stored.

1. The Query Triggers the Retrieval Process

First, a query is created - whether it be by your user through a chat interface, or auto-generated by your product within a user’s workflow.

In this case, the user is asking the AI chatbot, “How do I export my data?”

2. Retrieving Data from the Vector Database

Once the query is ‘received’, the next step is to look for any data relevant to that query that would help answer it.

To make this semantic search possible, the same embedding model that was used to generate embeddings from the source data must be used on your user’s query. That way, the vector database can look for the ‘closest’ vectors based on semantic meaning, and return the text chunks stored with those embeddings as the context.

For example, if the vector database contains documentation that talks about ‘downloading data’, or ‘exporting CSVs’, etc., it will likely retrieve that data to help answer this question of "How do I export my data?" even if there is no keyword overlap.
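The retrieval step can be sketched with a tiny in-memory store that uses one embedding function for both documents and queries. This is a toy under loud assumptions: `TinyVectorStore` is hypothetical, and `toy_embed` is a hand-written lookup table that merely imitates what a real embedding model learns. Real vector databases also use approximate nearest-neighbor indexes rather than sorting every row.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity, returning 0.0 when either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


class TinyVectorStore:
    """In-memory store that embeds documents and queries with one shared model."""

    def __init__(self, embed: Callable[[str], Vector]) -> None:
        self._embed = embed
        self._rows: List[Tuple[Vector, str]] = []

    def add(self, text: str) -> None:
        self._rows.append((self._embed(text), text))

    def search(self, query: str, top_k: int = 2) -> List[str]:
        query_vector = self._embed(query)  # same model as at indexing time
        ranked = sorted(
            self._rows, key=lambda row: cosine(query_vector, row[0]), reverse=True
        )
        return [text for _, text in ranked[:top_k]]


# Toy "embedding model": counts words per hand-picked concept. A real model
# learns these relationships instead of relying on a lookup table.
CONCEPTS = [
    {"export", "download", "downloading", "backup", "extract", "csv"},
    {"invoice", "billing", "payment"},
]


def toy_embed(text: str) -> Vector:
    words = text.lower().replace("?", "").replace(".", "").split()
    return [float(sum(w in concept for w in words)) for concept in CONCEPTS]


store = TinyVectorStore(toy_embed)
store.add("Downloading a CSV backup of your workspace")
store.add("Updating your billing and payment details")
top_hits = store.search("How do I export my data?", top_k=1)
print(top_hits)
```

Because the store owns the embedding function, documents and queries cannot accidentally be embedded with different models, which would make their vectors incomparable.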

3. Passing Query + Retrieved Context to LLM

This works exactly the same way as with structured data. The query and the retrieved context are passed to the LLM for a response.
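One possible way to assemble that final prompt is shown below. The instruction wording, the numbering scheme, and the `build_prompt` helper are assumptions for illustration; teams typically iterate on this template quite a bit. Numbering each chunk with its source makes it easier for the model to cite where an answer came from.

```python
from typing import Dict, List


def build_prompt(query: str, chunks: List[Dict[str, str]]) -> str:
    """Combine retrieved chunks and the user's question into one prompt string."""
    numbered = [
        f"[{i}] ({chunk['source']}) {chunk['text']}"
        for i, chunk in enumerate(chunks, start=1)
    ]
    lines = [
        "Use the numbered context to answer and cite sources like [1].",
        "If the context does not contain the answer, say so.",
        "",
        "Context:",
        *numbered,
        "",
        f"Question: {query}",
    ]
    return "\n".join(lines)


# Made-up retrieved chunks for illustration.
retrieved_chunks = [
    {"source": "Google Drive", "text": "Click Download to export a CSV."},
    {"source": "Slack", "text": "Exports are limited to admins."},
]
final_prompt = build_prompt("How do I export my data?", retrieved_chunks)
print(final_prompt)
```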

That’s it! You now know how the end-to-end ingestion, indexing, and retrieval process works in a RAG application.

Bonus: Permissions in RAG

One challenge with RAGing your users’ external data is permissions. A common product design pattern is to have one of your customers’ admins authenticate all the 3rd-party applications their organizations use, on behalf of all the other employees.

But with this approach, unless you build a dedicated permissions system to persist who has access to which documents/data, everyone in a company will have access to everyone else’s data.
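A minimal sketch of one way to enforce this is to store an access list with each chunk and filter at query time. The `allowed_users` field, the `filter_by_permission` helper, and the sample data are hypothetical; real permission models (groups, inherited folder access, revocations) are considerably more involved.

```python
from typing import Dict, List


def filter_by_permission(chunks: List[Dict], user_id: str) -> List[Dict]:
    """Keep only chunks whose access list includes the requesting user."""
    return [chunk for chunk in chunks if user_id in chunk["allowed_users"]]


# Made-up retrieved chunks, each carrying the users allowed to see it.
retrieved = [
    {"text": "Q3 board deck summary", "allowed_users": {"ceo", "cfo"}},
    {"text": "Office wifi instructions", "allowed_users": {"ceo", "cfo", "intern"}},
]
visible_to_intern = filter_by_permission(retrieved, "intern")
print([chunk["text"] for chunk in visible_to_intern])
```

The filter runs before anything reaches the LLM, so restricted content never enters the prompt for a user who should not see it.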

That is a very important and deep topic that deserves its own focus - we wrote about it at length here.

Wrapping Up

RAG transforms how companies build AI applications - by connecting LLMs to your customers’ external knowledge and data. You should now have a good high-level understanding of how RAG works, so you can communicate effectively with your engineering team and build the next wave of successful, production-ready AI applications.

If you’re interested in learning how Paragon has enabled AI companies like AI21, Copy.ai, and many more to scale their integration connectors and external data ingestion pipelines, book a demo here.